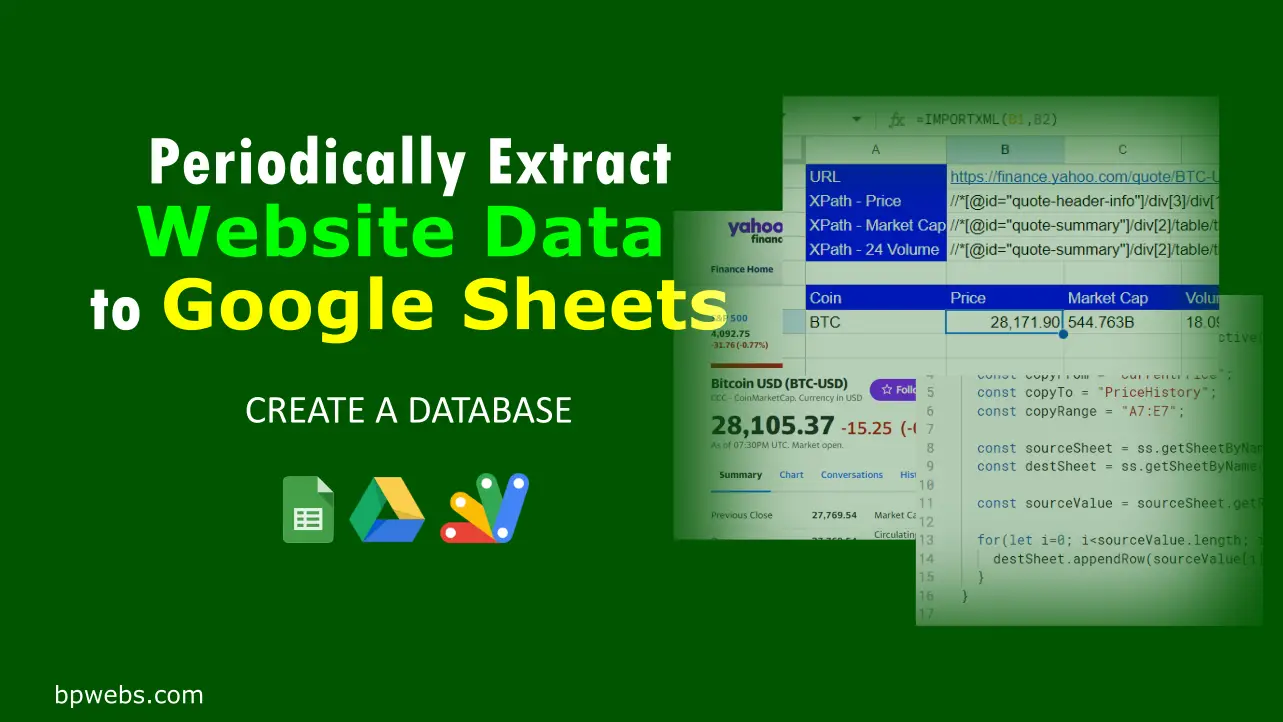

Nowadays, we have access to an abundance of data that can aid us in decision-making. Website data is a particularly accessible and cost-effective source, provided that we utilize appropriate data collection techniques and tools. Google Sheets is one such tool that can be used for free. In this article, I will demonstrate how you can periodically extract website data to Google Sheets.

By utilizing this method, you can streamline the process of gathering live data from websites and storing it directly into Google Sheets. By doing so, you will have the ability to access historical data for use in your data analysis projects. This automated approach can save time and effort, as you no longer need to manually collect data from various sources. Instead, the data will be continuously updated and readily available for your analysis needs.

Table of Contents

Why extract Website data

There are many reasons why you might want to extract website data. For instance, you may want to gather information for research or marketing purposes, or simply keep track of important information. By extracting website data, you can obtain valuable insights that can help you make informed decisions and improve your online presence. Additionally, website data can provide a wealth of information that may be difficult or time-consuming to obtain through other means.

However, the process of extracting data can be time-consuming, especially if it involves manually copying and pasting data from multiple sources. This is where automation comes in.

Why Extract Website Data to Google Sheets?

There are several advantages of using Google Sheets for data collection works.

Google Sheets is a popular cloud-based spreadsheet tool that allows users to create, edit, and share spreadsheets online. It offers a range of features such as data analysis, visualization, collaboration, and automation. Using Google Sheets to extract data from websites, businesses can benefit in the following ways:

Centralized Data Storage: With Google Sheets, businesses can store website data in a centralized location, accessible to multiple users from anywhere. This means teams can collaborate and work on the same data without the need to send multiple versions of a file back and forth.

Automate Data Collection Process: Google Sheets supports Google Apps Script, a powerful tool that enables users to automate their data collection process. By automating data collection, businesses can save time and reduce the risk of human errors. Moreover, since the Google Apps Scripts are run on Google Servers, users do not need to keep their computer on full-time to run the script and collect data.

Real-time Data Updates: By automating data collection, businesses can receive real-time updates on their website data. This enables them to make informed decisions quickly and promptly respond to market or customer behavior changes.

Data Visualization: Google Sheets offers several data visualization options, which can help businesses gain insights from their data. For instance, businesses can use Google Sheets to create charts, graphs, and pivot tables. Additionally, businesses can connect Google Sheets to Looker Studio, a data visualization tool, to create stunning dashboards that can be shared with others.

How to Extract website data to Google Sheets

In a previous post, we discussed several methods that you can use to import data from websites to Google Sheets. You can read that blog post from the following link.

How to import data from websites to Google Sheets.

I take the IMPORTXML method explained in the abovementioned article to go forward with this article.

Watch the following video or continue reading.

The IMPORTXML function of Google Sheets has three parameters.

IMPORTXML(url, xpath_query) URL - The URL of the web page that contains the HTML table XPATH_QUERY - The XPath query to run on the structured data LOCALE - A language and region locale code to use when parsing the data. If unspecified, the document locale will be used.

For example, let’s say you need to extract cryptocurrency prices into your Google Sheets at selected intervals for your own analysis.

To do this, first, you need to import live crypto updates to your Google Sheets. You can use the IMPORTXML function as explained below.

Getting the URL,

The first step to extracting website data is to identify the web page containing the data you want to extract. To do this, navigate to the web page and copy the URL from your browser’s address bar. For example, let’s say we want to extract data from Yahoo Finance;

- Go to the Yahoo Finance Cryptocurrencies page and click on the cryptocurrency you need to get updates, in this case, I chose Bitcoin.

- Copy the URL from the browser.

- Insert the URL into cell B2

Getting the xpath_query,

Once you have the URL, the next step is to identify the specific data you want to extract using an XPath query. An XPath query is a path to a specific element on the web page containing the data you want to extract. To get the XPath;

- Right-click on the bitcoin price, and select inspect. This will open up the Developer Tool of your browser. You will be landed on the text value of the Bitcoin price. you can confirm it by checking it with the bitcoin price or hovering the mouse over the code.

- Then right-click on the text value and then go to Copy > Copy XPath.

- Insert the XPath into cell B3.

Then you can use them in the IMORTXML function as below.

=IMPORTXML(B2,B3)

Where;

Cell B2 contains, https://finance.yahoo.com/quote/BTC-USD

Cell B3 contains, //*[@id="quote-header-info"]/div[3]/div[1]/div/fin-streamer[1]Once you import this formula into any cell in the same tab which contains the URL and XPath, you will get the current BTC price.

Similarly, you can do this for, Market Cap, 24h Volume, etc.. as well as other cryptocurrencies.

How to store extracted data in Google Sheets periodically

In the previous section, we discussed importing current cryptocurrency prices to Google Sheets. However, that only displays the prices at the time you view the sheet. To keep a record of previous data, we need to create a Google Apps Script that copies these values to a separate sheet at our desired intervals.

To write a Google Apps Script, open the script editor by going to “Extensions” and then selecting “Apps Scripts”.

Then replace the default code in the script editor with the following.

You need to change the variables copyFrom, copyTo and copyRange according to your Google Sheets.

The above Google Apps Script copies the data in the range you defined for the copyRange variable and appends it to the copyTo (priceHistory) sheet. If you have defined multiple rows to the copyRange, this code iterates through each row and appends them to the copyTo (priceHistory) sheet.

Set triggers to run the script automatically

Once you have run the Apps Script as described above, it will copy the data from the currentPrice sheet and append it to the priceHistory sheet. To avoid having to run the script every time manually, we can set up Time-Driven Triggers to automatically run the script at specified intervals.

To set up Time-Driven Triggers, follow these steps:

- Open the Apps Script IDE and click on the Triggers menu (the clock icon).

- Click the “Add Trigger” button located in the bottom right corner.

- Choose the “copyData” function to run.

- Select “Time-driven” as the event source.

- Select the type of time-based trigger that you want to use (e.g. Hour timer).

- Select the desired interval (e.g. every 2 hours).

- Click “Save” to set up the trigger

Once the trigger is set up, your script will automatically run at the specified intervals, copying the current price and appending it to the priceHistory sheet. With this automation in place, you can save time and avoid the risk of copying the data manually.

You can make a copy of the above Google Sheets from the following link.

How to customize this Google Sheet extract data from your websites

In the above example, extracted data to Google Sheets from yahoo finance. You can follow the same procedure to extract data from your preferred websites.

The Apps Script above is written only to copy the data in a given range to another Sheet (tab). So, you can make any changes to the first sheet (the CurrentPrice sheet in the above example). Then you only need to update the range you need to copy to the second Sheet in the Apps Script. To do this,

- First, make a copy of the Google Sheets from the link given above to your Google Drive

- Find the website URL and XPath to the specific data you need to extract

- Replace the URL and XPaths in the Google Sheets with your ones, or write the IMPORTXML function anywhere you prefer.

- Then, open Script Editor from Extension > Apps Script

- Then update the copyRange (in line 6) variable as per your changes done in step 3 above. This is the range you need to copy to the second sheet periodically.

- Change the Sheets name in lines 4 and 5 accordingly.

- Then run the scripts to test it.

- Then set up the triggers as explained above.

Recalculation times of functions that pull data from outside the Google Sheets

According to Google, Functions that pull data from outside the spreadsheet recalculate at the following times:

- ImportRange: 30 minutes

- ImportHtml, ImportFeed, ImportData, ImportXml: 1 hour

- GoogleFinance: may be delayed up to 20 minutes

So, the above method is suitable for pulling data in intervals larger than one hour.

How to pull data with higher frequency

The IMPORTXML function may encounter obstacles in scraping data from websites, including website structure, authentication, anti-scraping measures, JavaScript content, and detection of scraping tools. Additionally, several factors can contribute to data retrieval delays, such as network speed, website response time, the complexity of the XPath query, the number of queries, and the use of additional functions such as FILTER, SORT, etc…

Alternatively, some websites provide APIs that allow users to access their data. Some APIs are free while others may require payment based on the number of API calls. By utilizing these APIs in conjunction with Google Sheets and Google Apps Script, users can effortlessly extract data from these websites.

For example, in our “Create a Crypto Portfolio Tracker in Google Sheets” post, we employed CoinMarketCap API to extract crypto prices to Google Sheets. This post demonstrates how to use Google Apps Script and APIs to extract data to Google Sheets.

Wrapping Up

Websites can provide valuable data for various types of analysis, but not all of them offer the option to download historical data. This can make the process of manually copying data into a spreadsheet time-consuming and prone to errors. However, there is a more efficient way to collect this data using Google Sheets and Google Apps Script. In this blog post, you’ll learn how to automate the data collection process to extract website data to Google Sheets to ensure accuracy and save time.